Alongside has big plans to break negative cycles before they turn clinical, said Dr. Elsa Friis, a licensed psychologist for the company, whose background includes identifying autism, ADHD and suicide risk using Large Language Models (LLMs).

The Alongside app currently partners with more than 200 schools across 19 states, and collects student chat data for its annual youth mental health report, which is not a peer-reviewed publication. Their findings this year, said Friis, were surprising. With almost no mention of social media or cyberbullying, the student users reported that their most pressing issues had to do with feeling overwhelmed, poor sleep habits and relationship problems.

Alongside boasts positive and insightful data points in its report and in a pilot study conducted earlier in 2025, but experts like Ryan McBain, a health researcher at the RAND Corporation, said that the data isn't robust enough to understand the real implications of these types of AI mental health tools.

“If you’re going to market a product to millions of children in adolescence throughout the United States through school systems, they need to meet some minimum standard in the context of actual rigorous trials,” said McBain.

But beneath all of the report’s data, what does it really mean for students to have 24/7 access to a chatbot that is designed to address their mental health, social, and behavioral concerns?

What’s the difference between AI chatbots and AI companions?

AI companions fall under the larger umbrella of AI chatbots. And while chatbots are becoming more and more sophisticated, AI companions are distinct in the ways that they interact with users. AI companions tend to have fewer built-in guardrails, meaning they are coded to endlessly adapt to user input; AI chatbots, on the other hand, might have more guardrails in place to keep a conversation on track or on topic. For example, a troubleshooting chatbot for a food delivery company has specific instructions to carry on conversations that only pertain to food delivery and app issues, and isn’t designed to stray from the topic because it doesn’t know how to.

But the line between AI chatbot and AI companion becomes blurred as more and more people use chatbots like ChatGPT as an emotional or therapeutic sounding board. The people-pleasing features of AI companions can become, and have become, a growing concern, especially when it comes to teens and other vulnerable people who use these companions to, at times, validate their suicidality, delusions and unhealthy dependency on these AI companions.

A recent report from Common Sense Media expanded on the harmful effects that AI companion use has on adolescents and teens. According to the report, AI platforms like Character.AI are “designed to simulate humanlike interaction” in the form of “virtual friends, confidants, and even therapists.”

Although Common Sense Media found that AI companions “pose ‘unacceptable risks’ for users under 18,” young people are still using these platforms at high rates.

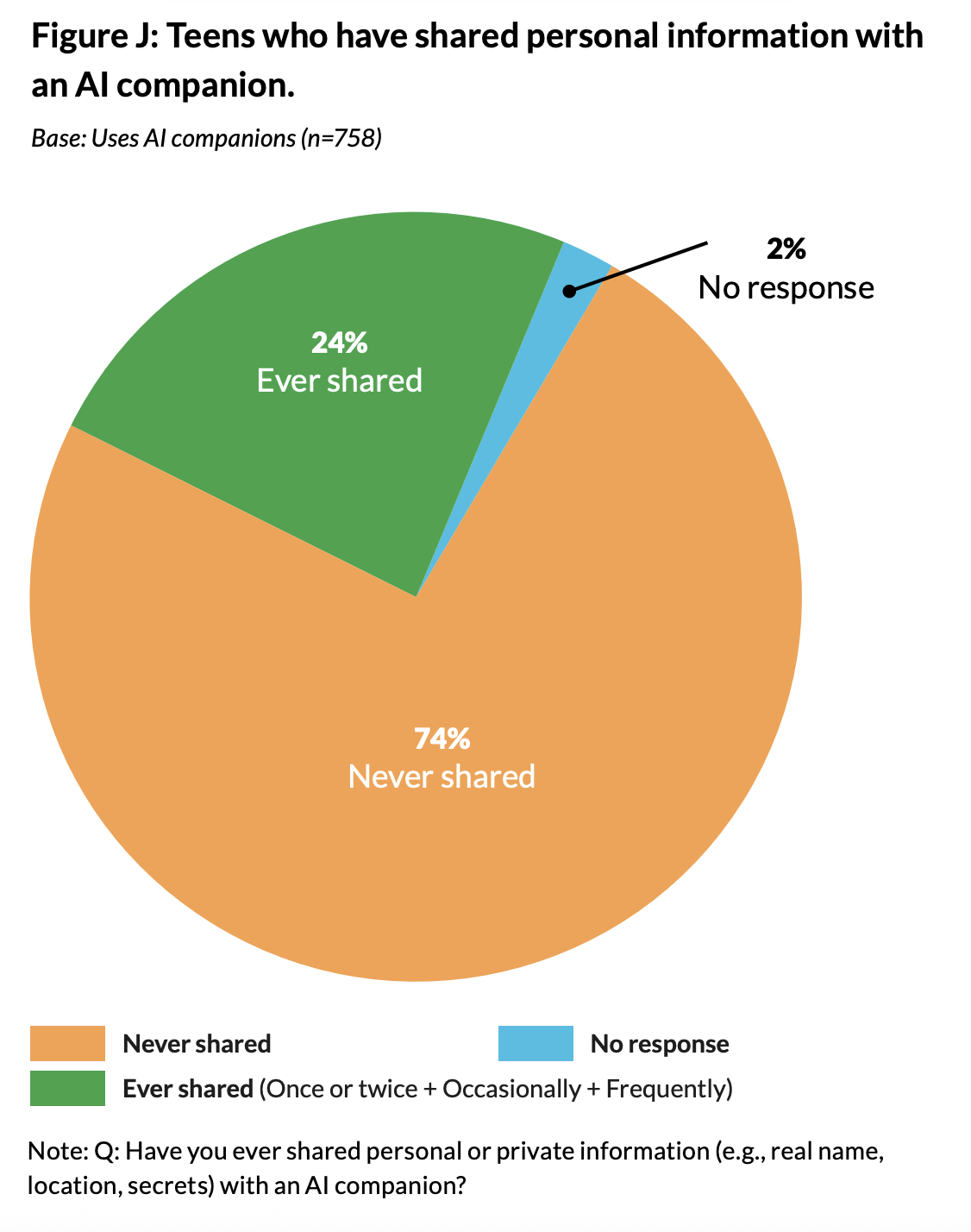

From Common Sense Media 2025 report, “Talk, Trust, and Trade-Offs: How and Why Teens Use AI Companions.”

Seventy-two percent of the 1,060 teens surveyed by Common Sense said that they had used an AI companion before, and 52% of teens surveyed are “regular users” of AI companions. For the most part, however, the report found that the majority of teens value human friendships more than AI companions, don’t share personal information with AI companions and hold some level of skepticism toward AI companions. Thirty-nine percent of teens surveyed also said that they apply skills they practiced with AI companions, like expressing emotions, apologizing and standing up for themselves, in real life.

Compared with Common Sense Media’s recommendations for safer AI use, Alongside’s chatbot features do meet some of them, like crisis intervention, usage limits and skill-building components. According to Mehta, there is a big difference between an AI companion and Alongside’s chatbot. Alongside’s chatbot has built-in safety features that require a human to review certain conversations based on trigger words or concerning phrases. And unlike tools such as AI companions, Mehta continued, Alongside discourages student users from chatting too much.

One of the biggest challenges that chatbot developers like Alongside face is mitigating people-pleasing tendencies, a defining characteristic of AI companions, said Friis. Alongside’s team has put guardrails in place to avoid people-pleasing, which can turn sinister. “We aren’t going to adapt to foul language, we aren’t going to adapt to bad habits,” said Friis. But it’s up to Alongside’s team to anticipate and determine which language falls into harmful categories, including when students try to use the chatbot for cheating.

According to Friis, Alongside errs on the side of caution when it comes to determining what kind of language constitutes a concerning statement. If a chat is flagged, teachers at the partner school are pinged on their phones. In the meantime, Kiwi, Alongside’s chatbot, prompts the student to complete a crisis assessment and directs them to emergency service numbers if needed.

Addressing staffing shortages and resource gaps

In school settings where the ratio of students to school counselors is often impossibly high, Alongside acts as a triaging tool or liaison between students and their trusted adults, said Friis. For example, a conversation between Kiwi and a student might consist of back-and-forth troubleshooting about building healthier sleeping habits. The student might be prompted to talk to their parents about making their room darker or adding a nightlight for a better sleep environment. The student might then come back to the chat after talking with their parents and tell Kiwi whether or not that solution worked. If it did, the conversation concludes; if it didn’t, Kiwi can suggest other possible solutions.

According to Dr. Friis, a few 5-minute back-and-forth conversations with Kiwi would translate to days, if not weeks, of conversations with a school counselor who has to prioritize students with the most severe issues and needs, like repeated suspensions, suicidality and dropping out.

Using digital technologies to triage health issues is not a new idea, said RAND researcher McBain, who pointed to doctors’ waiting rooms that greet patients with a health screener on an iPad.

“If a chatbot is a slightly more dynamic user interface for gathering that sort of information, then I think, in theory, that is not an issue,” McBain continued. The unanswered question is whether chatbots like Kiwi perform better than, as well as, or worse than a human would, and the only way to compare the human to the chatbot would be through randomized controlled trials, said McBain.

“One of my biggest fears is that companies are rushing in to try to be the first of their kind,” said McBain, and in the process they are lowering the safety and quality standards under which these companies and their academic partners circulate optimistic and eye-catching results from their products, he continued.

But there is mounting pressure on school counselors to meet student needs with limited resources. “It’s really hard to create the space that [school counselors] want to create. Counselors want to have those interactions. It’s the system that’s making it really hard to have them,” said Friis.

Alongside offers its school partners professional development and consultation services, as well as quarterly summary reports. A lot of the time, these services revolve around packaging data for grant proposals or presenting compelling information to superintendents, said Friis.

A research-backed approach

On its website, Alongside touts the research-backed methods used to develop its chatbot, and the company has partnered with Dr. Jessica Schleider at Northwestern University, who studies and develops single-session mental health interventions (SSIs): interventions designed to address and resolve mental health concerns without the expectation of any follow-up sessions. A typical counseling intervention lasts at least 12 weeks, so single-session interventions were appealing to the Alongside team, but “what we know is that no product has ever been able to really effectively do that,” said Friis.

However, Schleider’s Lab for Scalable Mental Health has published multiple peer-reviewed trials and clinical research demonstrating positive outcomes from implementing SSIs. The Lab for Scalable Mental Health also offers open source materials for parents and professionals interested in implementing SSIs for teens and young people, and its initiative Project YES offers free and anonymous online SSIs for youth experiencing mental health concerns.


What happens to a kid’s data when using AI for mental health interventions?

Alongside gathers student data from conversations with the chatbot, like mood, hours of sleep, exercise habits, social habits and online interactions, among other things. While this data can give schools insight into their students’ lives, it does raise questions about student surveillance and data privacy.

From Common Sense Media 2025 report, “Talk, Trust, and Trade-Offs: How and Why Teens Use AI Companions.”


Alongside, like many other generative AI tools, uses other companies’ LLM APIs (application programming interfaces), meaning its chatbot relies on another company’s LLM, such as the one behind OpenAI’s ChatGPT, to process chat input and produce chat output. Alongside also has its own in-house LLMs, which its AI team has developed over several years.

Growing concerns about how user data and personal information are stored are especially pertinent when it comes to sensitive student data. The Alongside team has opted into OpenAI’s zero data retention policy, which means that none of the student data is stored by OpenAI or the other LLM providers that Alongside uses, and none of the chat data is used for training purposes.

Because Alongside operates in schools across the U.S., it is FERPA and COPPA compliant, but the data still has to be stored somewhere. So students’ personally identifiable information (PII) is decoupled from their chat data, and that information is stored by Amazon Web Services (AWS), a cloud platform that is an industry standard for private data storage by tech companies around the world.

Alongside uses an encryption process that separates student PII from their chats. Only when a conversation is flagged and needs to be seen by humans for safety reasons is the student’s PII reconnected to the chat in question. In addition, Alongside is required by law to store student chats and information when it has issued a crisis alert, and parents and guardians are free to request that information, said Friis.
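The separation described above can be illustrated with a minimal sketch. It assumes a simpler, swapped-in technique: keyed pseudonymization with HMAC-SHA256 rather than Alongside’s actual encryption scheme, which the company has not published. PII and chat messages sit in separate stores that share only an opaque token, and the hypothetical `link_for_review` method rejoins them only for conversations that have been flagged. All class and method names are illustrative.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass, field


@dataclass
class PseudonymizedStore:
    """Hypothetical sketch: PII and chats are kept in separate maps and joined
    only through a keyed pseudonym. Not Alongside's real implementation."""
    _key: bytes = field(default_factory=lambda: secrets.token_bytes(32))
    _pii: dict = field(default_factory=dict)    # pseudonym -> PII record
    _chats: dict = field(default_factory=dict)  # pseudonym -> list of messages
    _flagged: set = field(default_factory=set)  # pseudonyms cleared for human review

    def pseudonym(self, student_id: str) -> str:
        # HMAC-SHA256: a stable token that can't be recomputed without the secret key.
        return hmac.new(self._key, student_id.encode(), hashlib.sha256).hexdigest()

    def register(self, student_id: str, pii: dict) -> str:
        token = self.pseudonym(student_id)
        self._pii[token] = pii
        self._chats.setdefault(token, [])
        return token

    def log_message(self, token: str, text: str) -> None:
        # The chat side only ever sees the token, never the PII.
        self._chats[token].append(text)

    def flag(self, token: str) -> None:
        self._flagged.add(token)

    def link_for_review(self, token: str) -> tuple[dict, list]:
        # PII is reattached to a conversation only after it has been flagged.
        if token not in self._flagged:
            raise PermissionError("conversation not flagged; PII stays separated")
        return self._pii[token], list(self._chats[token])


store = PseudonymizedStore()
token = store.register("student-42", {"name": "Jordan"})
store.log_message(token, "I can't sleep lately")
store.flag(token)
pii, chat = store.link_for_review(token)
```

In a real deployment, the two stores would live in separate systems with separate access controls. Keeping them as dictionaries here just makes the join-only-on-flag rule easy to see.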

Typically, parental consent and student data policies are handled by the school partners, and as with any school service, like counseling, there is a parental opt-out option, which must adhere to state and district guidelines on parental consent, said Friis.

Alongside and its school partners put guardrails in place to make sure that student data is kept safe and anonymous. However, data breaches can still happen.

How the Alongside LLMs are trained

One of Alongside’s in-house LLMs is used to identify potential crises in student chats and alert the necessary adults to that crisis, said Mehta. This LLM is trained on student and synthetic outputs and on keywords that the Alongside team enters manually. And because language changes often and isn’t always straightforward or easily recognizable, the team keeps an ongoing log of different words and phrases, like the popular abbreviation “KMS” (shorthand for “kill myself”), that they retrain this particular LLM to recognize as crisis-driven.
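To show why a manually maintained term log matters, here is a minimal sketch of a keyword pre-filter built from such a log. This is a deliberately simpler, swapped-in technique: Alongside describes retraining an LLM on these terms, not matching them with regular expressions. Only “KMS” comes from the article. The other entries and the function names `build_pattern` and `flag_message` are hypothetical.

```python
import re

# Hypothetical, manually maintained log of crisis terms mapped to a category.
# "kms" is from the article; the other entries are illustrative placeholders.
CRISIS_TERMS = {
    "kms": "kill myself",
    "kill myself": "kill myself",
    "want to die": "suicidal ideation",
}


def build_pattern(terms: dict[str, str]) -> re.Pattern:
    # Try longer phrases first so multi-word entries match before shorter ones;
    # \b word boundaries keep "kms" from matching inside an unrelated word.
    alternatives = sorted(terms, key=len, reverse=True)
    return re.compile(
        r"\b(" + "|".join(re.escape(t) for t in alternatives) + r")\b",
        re.IGNORECASE,
    )


def flag_message(text: str, terms: dict[str, str] = CRISIS_TERMS) -> list[str]:
    """Return the crisis categories matched in a message (empty list if none)."""
    pattern = build_pattern(terms)
    return [terms[m.group(1).lower()] for m in pattern.finditer(text)]


print(flag_message("finals week, kms"))   # ['kill myself']
print(flag_message("I'm doing fine"))     # []
```

A fixed list like this misses new slang until someone adds it, which is the same maintenance burden the Alongside team describes. It also can’t read context the way a trained model can.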

According to Mehta, manually inputting data to train the crisis-assessing LLM is one of the biggest efforts he and his team have to tackle, but he doesn’t see a future in which this process could be automated by another AI tool. “I wouldn’t be comfortable automating something that could trigger a crisis [response],” he said. Instead, the clinical team led by Friis contributes to this process through a clinical lens.

But with the potential for rapid growth in Alongside’s number of school partners, these processes will be very difficult to keep up with manually, said Robbie Torney, senior director of AI programs at Common Sense Media. Although Alongside emphasized its practice of including human input in both its crisis response and its LLM development, “you can’t necessarily scale a system like [this] easily because you’re going to run into the need for more and more human review,” continued Torney.

Alongside’s 2024-25 report tracks conflicts in students’ lives, but doesn’t distinguish whether those conflicts are happening online or in person. According to Friis, though, it doesn’t really matter where peer-to-peer conflict is taking place. Ultimately, it’s most important to be person-centered, said Dr. Friis, and to stay focused on what really matters to each individual student. Alongside does offer proactive skill-building lessons on social media safety and digital stewardship.

When it comes to sleep, Kiwi is programmed to ask students about their phone habits “because we know that having your phone at night is one of the main things that’s gonna keep you up,” said Dr. Friis.

Universal mental health screeners available

Alongside also offers an in-app universal mental health screener to school partners. One district in Corsicana, Texas, an old oil town outside Dallas, found the data from the universal mental health screener invaluable. According to Margie Boulware, executive director of special programs for Corsicana Independent School District, the community has had problems with gun violence, but the district had no way of surveying its 6,000 students on the mental health effects of traumatic events like these until Alongside was introduced.

According to Boulware, 24% of students surveyed in Corsicana had a trusted adult in their life, six percentage points fewer than the average in Alongside’s 2024-25 report. “It’s a little shocking how few kids are saying ‘we actually feel connected to an adult,’” said Friis. According to research, having a trusted adult supports young people’s social and emotional health and wellbeing, and can also counter the effects of adverse childhood experiences.

In a county where the school district is the biggest employer and where 80% of students are economically disadvantaged, mental health resources are scarce. Boulware drew a connection between the uptick in gun violence and the high percentage of students who said that they didn’t have a trusted adult in their home. And although the data Alongside gave the district didn’t directly correlate with the violence the community had been experiencing, it was the first time the district was able to take a more comprehensive look at student mental health.

So the district formed a task force to tackle these issues of increased gun violence and declining mental health and belonging. And for the first time, rather than having to guess how many students were struggling with behavioral issues, Boulware and the task force had representative data to build on. Without the universal screening survey that Alongside delivered, the district would have relied on its end-of-year feedback survey, with questions like “How was your year?” and “Did you like your teacher?”

Boulware believed that the universal screening survey encouraged students to self-reflect and answer questions more honestly than previous feedback surveys the district had conducted.

According to Boulware, student resources, and mental health resources in particular, are scarce in Corsicana. But the district does have a team of counselors, including 16 academic counselors and 6 social emotional counselors.

With not enough social emotional counselors to go around, Boulware said that many tier one students, or students who don’t require regular one-on-one or group academic or behavioral interventions, fly under the radar. She saw Alongside as an easily accessible tool that offers students discreet coaching on mental health, social and behavioral issues. It also gives educators and administrators like herself a glimpse behind the curtain into student mental health.

Boulware praised Alongside’s proactive features, like gamified skill building for students who struggle with time management or task organization, who can earn points and badges for completing certain skills lessons.

And Alongside fills an important gap for staff in Corsicana ISD. “The amount of hours that our kiddos are on Alongside…are hours that they’re not waiting outside of a student support counselor office,” said Boulware. Because counselors are so outnumbered by students, that frees the social emotional counselors to focus on students experiencing a crisis. There’s “no way I could have allotted the resources” that Alongside brings to Corsicana, Boulware added.

The Alongside app requires 24/7 human monitoring by its school partners. This means that designated educators and administrators in each district and school are assigned to receive alerts at all hours of the day, any day of the week, including holidays. This feature concerned Boulware at first. “If a kiddo’s struggling at three o’clock in the morning and I’m asleep, what does that look like?” she said. Boulware and her team had to hope that an adult would see a crisis alert very quickly, she continued.

This 24/7 human monitoring system was tested in Corsicana last Christmas break. An alert came in, and it took Boulware ten minutes to see it on her phone. By then, the student had already begun working on an assessment survey prompted by Alongside, the principal, who had seen the alert before Boulware, had called her, and she had received a text message from the student support council. Boulware was able to contact the local chief of police and address the unfolding crisis. The student was able to connect with a counselor that same afternoon.